Watch us turn your wordy CVs into avatars using GenAI

TLDR:

- The Why: Bagged 800 OpenAI credits post-hackathon — rich in resources, yet bankrupt in direction.

- The Grind: Months of mixing art styles, tweaking AI models, and surviving the chaos of prompt engineering.

- The Cool Bit: Opus Avatar was born. Turn your CV into a unique avatar, share it with a click, and check out the diverse visuals of others’ professional journeys.

- The Takeaway: Who said CVs had to be boring? Not us. Got creative instead of practical, no regrets!

The Why: A Free Bag of OpenAI Credits

It's often said that without a clear problem to solve, capital alone won't create remarkable products.

We're here to prove that right.

After winning 800 OpenAI credits by coming 2nd in a bio hackathon sponsored by Entrepreneur First, we were both confronted with riches without purpose - we were paralysed by choice and had no idea what to build.

So why did we decide to generate avatars from CVs?

Josh was reviewing a friend's CV and was subtly venting about the process during a catch-up. Now, we're all familiar with inefficient online applications that promise to auto-fill forms after reading text from your CV. Why couldn't these platforms build tech that reads and processes text more efficiently? We realised it would be quite easy to create an interface with an LLM running in the backend that could process your text data and populate online applications with the data from your CV.

We decided to ignore that completely and generate fun-looking avatars instead.

The How: A Journey Through Confused Art Styles and GPT Cringe

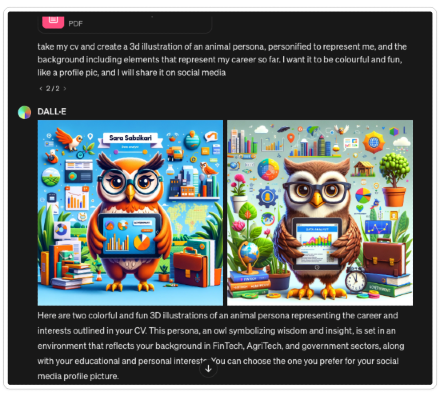

The entire process took us several months of iterating between developing, designing, prompt engineering and testing (and btw, no one prepares you for how truly convoluted this process is). We tested the viability of the image generation using DALL-E via the ChatGPT interface. One of our first lessons, among many, was realising it's best not to leave 'make it look cool' open to interpretation for a Gen AI model.

It was clear that we needed to provide very specific instructions, from art style to image composition, and without this specificity our outputs would be incredibly varied. There were some things we could anticipate (for some reason the avatars were always owls) and other things we were unsure how to influence (like turning down the temperature of DALL-E). Identifying these variables to control, and to what degree, felt like an indeterminate problem and one that would threaten the likelihood of building something viable to begin with.

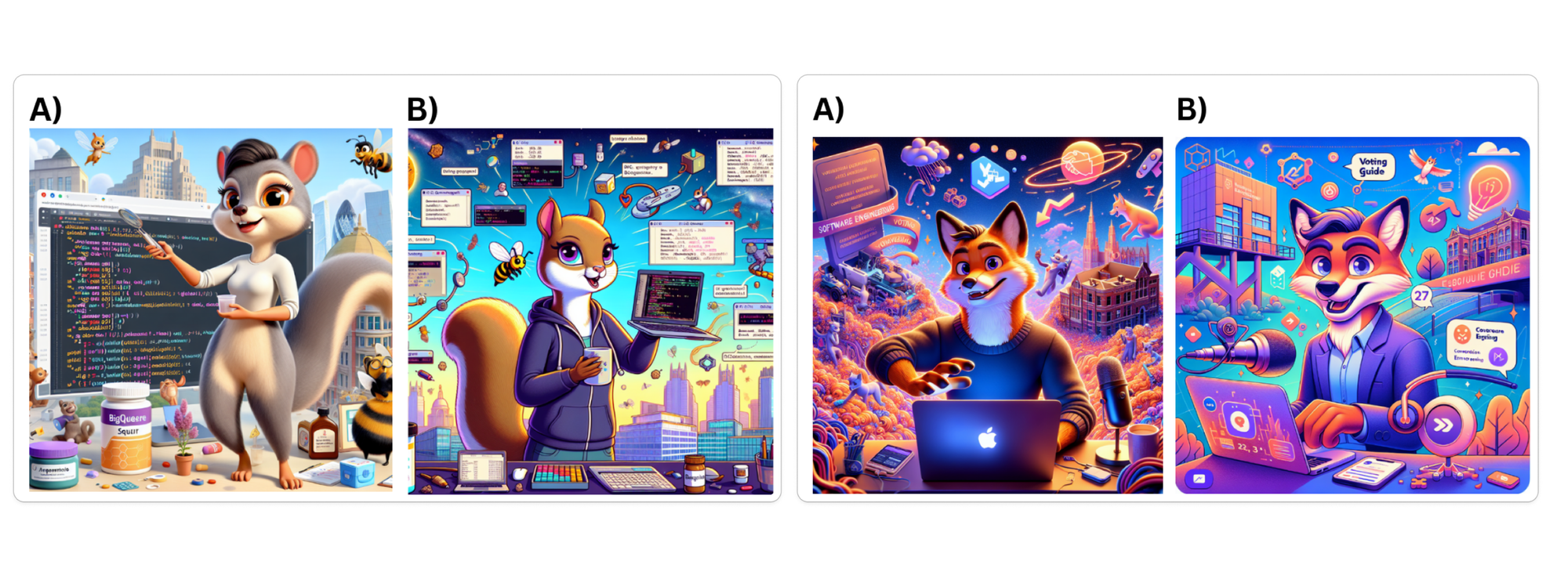

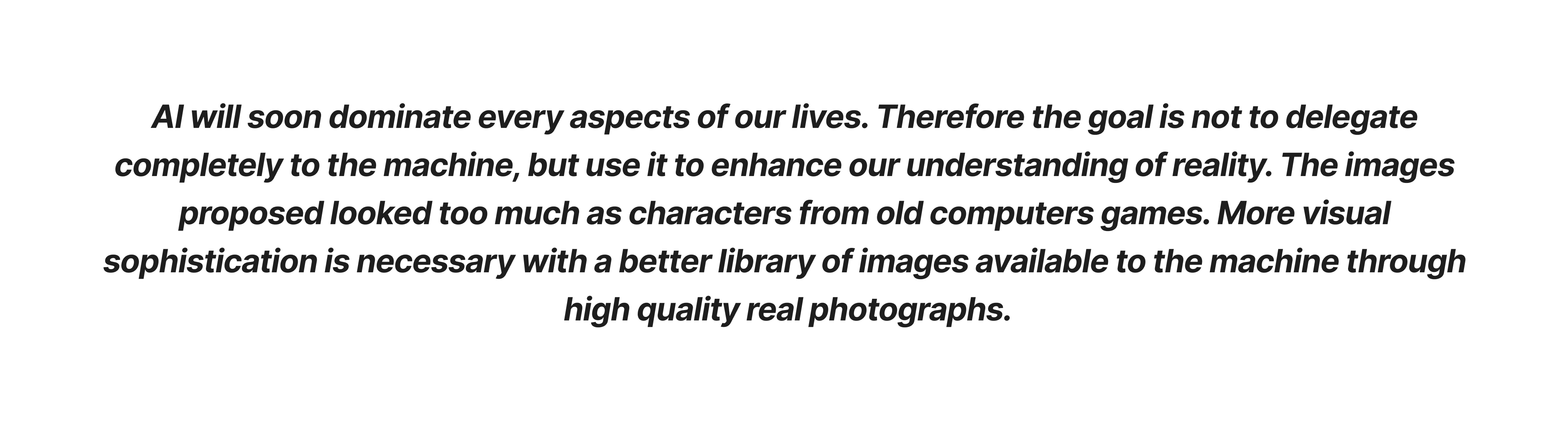

At first, we had very different style preferences - we were torn between futuristic, sci-fi visuals and animated 3D avatars. We eventually left the decision to actual users, some of whose (quite blunt) responses are shared further down.

After many sessions trialling different prompt styles, our patience wore thin. Josh decided to develop a prompt tester that would allow us to iterate through prompt engineering at a much faster pace. It's possible that the prompt tester alone is a more valuable development than our end product, but who doesn't love looking at an avatar of themselves?

Here's a link to the repo for those interested in developing something similar for their Gen AI projects.

The Backend

We experimented with a lot of different models and pipelines, taking a CV PDF in and returning a PNG image out. Our final design boils down to 3 models and 2 core prompts.

The PDF is processed using Tesseract, an open-source Optical Character Recognition (OCR) engine. Once Tesseract returns the contents of the CV as text, we then use GPT-4 Turbo (specifically gpt-4-1106-preview) with JSON response formatting. What this essentially means is that we ask GPT-4 to answer a bunch of questions about the user's CV, process the JSON output and use it to populate a predefined image prompt.
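For the curious, here is a rough sketch of what that flow could look like in Python. It is not our production code: the questions, the JSON keys and the prompt template below are made up for illustration, and we assume the `pdf2image`, `pytesseract` and `openai` (v1+) packages are installed with an `OPENAI_API_KEY` set in the environment.

```python
import json

# Hypothetical questions and template, invented for this sketch.
QUESTIONS = {
    "job_title": "What is the person's most recent job title?",
    "industry": "Which industry best describes their career?",
    "top_skills": "What are their three strongest skills?",
}

IMAGE_PROMPT_TEMPLATE = (
    "A 3D avatar representing a career as {job_title} in {industry}, "
    "surrounded by objects that symbolise {top_skills}."
)


def extract_cv_text(pdf_path):
    """OCR every page of the PDF with Tesseract and join the text."""
    # Imported lazily so the pure helpers below work without these packages.
    from pdf2image import convert_from_path
    import pytesseract

    pages = convert_from_path(pdf_path)  # one PIL image per page
    return "\n".join(pytesseract.image_to_string(page) for page in pages)


def ask_questions(cv_text):
    """Ask GPT-4 Turbo the questions and return its raw JSON string."""
    from openai import OpenAI

    client = OpenAI()
    response = client.chat.completions.create(
        model="gpt-4-1106-preview",
        response_format={"type": "json_object"},  # JSON mode
        messages=[
            {
                "role": "system",
                "content": "Answer these questions about the CV. Reply with a "
                "JSON object that uses the same keys: " + json.dumps(QUESTIONS),
            },
            {"role": "user", "content": cv_text},
        ],
    )
    return response.choices[0].message.content


def build_image_prompt(raw_json):
    """Parse the model's JSON answers and fill in the image prompt template."""
    answers = json.loads(raw_json)
    fields = {}
    for key in QUESTIONS:
        value = answers.get(key, "unspecified")  # fallback for missing answers
        if isinstance(value, list):
            value = ", ".join(str(item) for item in value)
        fields[key] = str(value)
    return IMAGE_PROMPT_TEMPLATE.format(**fields)
```

Chaining the three together is then `build_image_prompt(ask_questions(extract_cv_text("cv.pdf")))`. Keeping the template filling separate from the API calls also makes it easy to test prompt changes without spending credits.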

Getting our image prompt template right took way longer than we expected. This process really highlighted to us the difficulty of building robust and reliable prompts, to the extent that we are still not fully satisfied with the variability in image outputs, though it's much better than at the start.

The bottleneck in our pipeline is the DALL-E 3 API, as it currently takes around 1 minute from the prompt being sent to getting a response. Once we have the image from DALL-E 3, we store it for the user along with some core information (such as name and job title - we never store CVs) and any social links the user has entered, to be displayed with the avatar.
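Sketched in Python, this last step might look something like the following. Treat it as an illustration under assumptions rather than our actual code: the `AvatarRecord` fields, the `make_record` helper and the 120-second timeout are invented for the example, and it again assumes the `openai` (v1+) package with an API key in the environment.

```python
from dataclasses import dataclass, field, asdict


@dataclass
class AvatarRecord:
    """What gets kept per user in this sketch. Note there is no CV field."""
    name: str
    job_title: str
    image_url: str
    social_links: list = field(default_factory=list)


def generate_avatar_url(image_prompt):
    """Send the filled-in prompt to DALL-E 3 and return the image URL."""
    # Imported lazily so the record helper works without the package.
    from openai import OpenAI

    # Generous timeout (an assumption) because a response takes about a minute.
    client = OpenAI(timeout=120.0)
    result = client.images.generate(
        model="dall-e-3",
        prompt=image_prompt,
        size="1024x1024",
        n=1,
    )
    # The returned URL is temporary, so a real app would copy the image
    # into its own storage rather than keep this link.
    return result.data[0].url


def make_record(answers, image_url, social_links):
    """Keep only the core fields; everything else in `answers` is dropped."""
    return AvatarRecord(
        name=answers.get("name", ""),
        job_title=answers.get("job_title", ""),
        image_url=image_url,
        social_links=list(social_links),
    )
```

Building the record from an explicit list of fields, instead of saving whatever the earlier steps produced, is one simple way to make sure the CV text never ends up in storage by accident.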

Did we lose a few hairs? Luckily, Josh still has a full head of hair.

Honest User Opinions

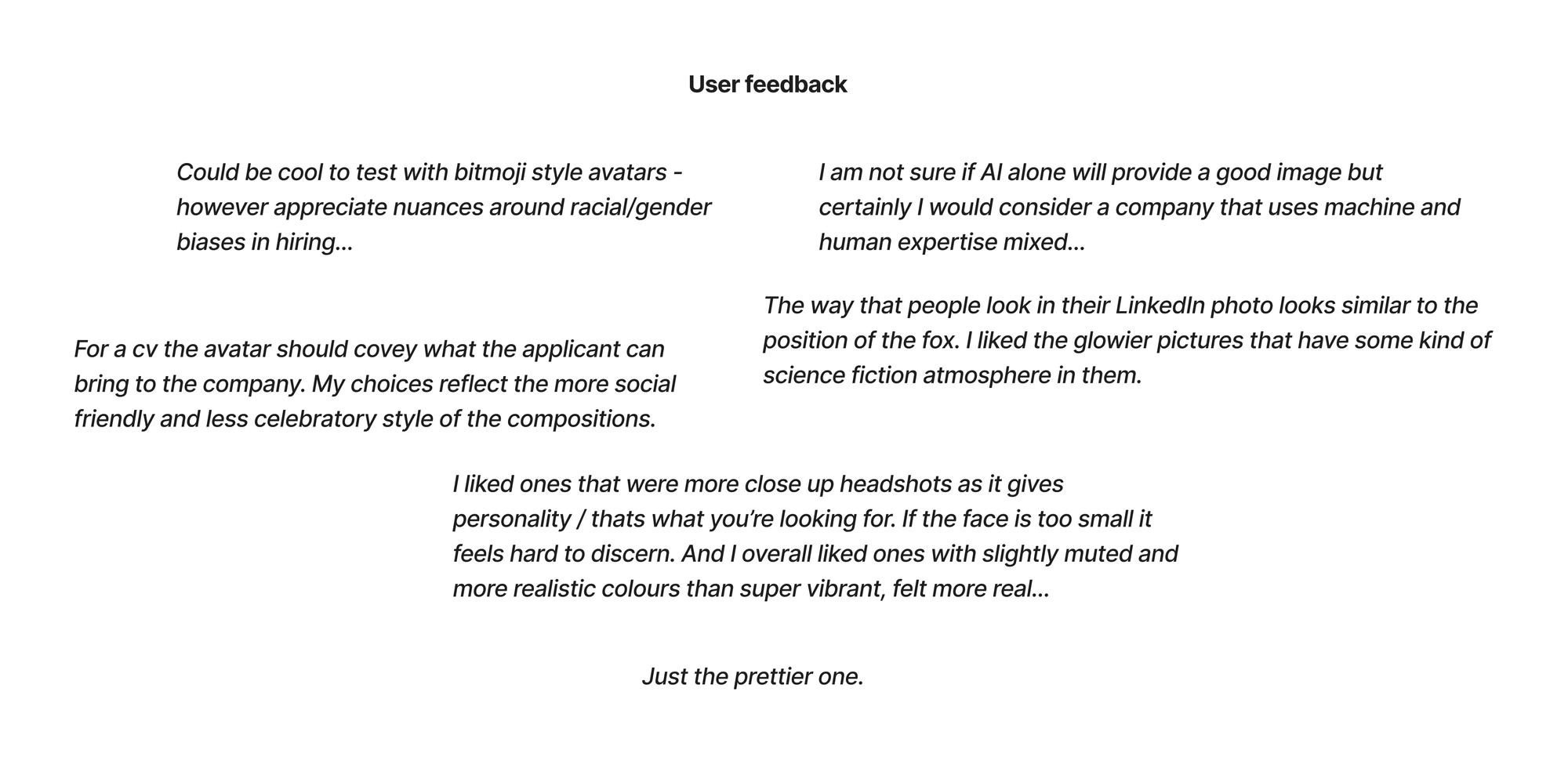

We took user feedback very seriously. Inspired by Giff Constable's short book Talking to Humans on how to approach user testing, we interviewed some individuals who matched our ideal user profile, and produced a survey for other users to provide anonymous feedback.

We asked users to provide feedback on the two styles we were torn between: 3D and cartoonish avatars.

After codifying 51 responses, with 120 explanations, the most popular style was the 3D avatar. We're under the impression our users gave quite honest and thought-provoking feedback, but we'll let you be the judge of that.

When asked how much users would pay for a product like this, our responses were varied... to say the least.

And of course, the comment that ultimately won this unstated competition:

The Result

After several months of back and forth building, testing and reflecting, we now have an end product to share with you. Opus Avatar is now available for you to:

- Generate an avatar based on the contents of your CV (must be PDF).

- Share your socials for those who are interested in connecting after viewing your avatar.

- Explore the outputs of other individuals and see how truly diverse our careers and experiences are.

What do we think the value add is here?

Sometimes reflecting on the totality of our experiences can be challenging. Most of us will intentionally or organically find ourselves in many different domains in our lifetimes. Our CVs are one way to capture what our professional blueprints look like, but they don't have to be the only way. We were inspired to visualise our unique combination of interests, skills and domains, for the sake of it.

And at the same time, we decided to abandon the question of whether this fundamentally solves anything, and start to enjoy the creative process of building with a fun idea in mind.

How to interpret your avatar

Acknowledgements

We want to thank Maria, Zowie, Josh's entire family (special shoutout to Tom), Kiki, Claire and George for entertaining and hopefully enjoying this process from start to finish.